CAN CHATGPT THINK?

TLDR: Yes.

Let me explain.

But before we get into the details, it’s important to define what we mean by “thinking”.

"Thinking", dixit ChatGPT4, generally refers to the cognitive processes involved in generating, organizing, and evaluating information to form beliefs, make decisions, and solve problems.

I have seen tweets debating whether GPT models can “understand”. “Understand” is a loaded word, because for some people it implies consciousness. GPT models are not conscious, but they can “understand” concepts, in the sense that they can process them through induction, deduction, and abduction. Saying that AIs cannot “understand” like humans, or that they don’t have a “world model”, is like saying that airplanes cannot fly because they cannot flap their wings like birds. It doesn’t matter. Just as planes can fly faster and higher than birds, soon AIs will be able to think faster and better than all humans.

Let’s define deduction, induction, and abduction.

Deduction is a form of reasoning in which a specific conclusion is reached based on general premises or rules. Deductive arguments are structured so that if the premises are true, the conclusion must also be true.

Example:

Premise 1: All humans are mortal.

Premise 2: Socrates is a human.

Conclusion: Therefore, Socrates is mortal.
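
To make the mechanics concrete, here is a minimal Python sketch of the syllogism above. It is only an illustration of "apply a general rule to a specific fact"; the names `rules`, `facts`, and `deduce` are invented for this example and do not describe how ChatGPT works internally.

```python
# Illustrative sketch: deduction as applying a general rule to a specific fact.
# All names here (rules, facts, deduce) are hypothetical, chosen for this example.

# General premise: every member of the category on the left has the property on the right.
rules = {"human": "mortal"}

# Specific premise: Socrates is a human.
facts = {"Socrates": {"human"}}

def deduce(facts, rules):
    """Return each individual's categories plus everything the rules force to follow."""
    derived = {name: set(categories) for name, categories in facts.items()}
    changed = True
    while changed:  # keep applying rules until nothing new follows
        changed = False
        for categories in derived.values():
            for premise, conclusion in rules.items():
                if premise in categories and conclusion not in categories:
                    categories.add(conclusion)
                    changed = True
    return derived

conclusions = deduce(facts, rules)
print("mortal" in conclusions["Socrates"])
```

If the premises are true, the conclusion cannot fail to follow, which is exactly what distinguishes deduction from the two forms of reasoning below.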

Induction is a form of reasoning in which general conclusions are drawn from specific observations or instances. Inductive arguments are based on patterns, trends, or probabilities, and their conclusions are not guaranteed to be true but are considered likely based on the available evidence.

Example:

Observation: Whenever you water your plants every two days, they grow well.

Conclusion: Watering plants every two days is generally beneficial for their growth.
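
The same idea can be sketched in Python as counting how many observations support a generalization. The observation data below is made up for illustration, and `induce` is a hypothetical helper, not a standard function.

```python
# Illustrative sketch: induction as generalizing from repeated observations.
# The observation data below is made up for this example.

observations = [
    {"watering_interval_days": 2, "grew_well": True},
    {"watering_interval_days": 2, "grew_well": True},
    {"watering_interval_days": 2, "grew_well": True},
    {"watering_interval_days": 2, "grew_well": False},  # an exception
]

def induce(observations):
    """Return the fraction of observations that support the generalization."""
    supporting = sum(1 for obs in observations if obs["grew_well"])
    return supporting / len(observations)

support = induce(observations)
print(f"Support for 'watering every two days helps growth': {support:.0%}")
```

Note that the result is a degree of support, not a proof: one more contrary observation can always weaken the generalization.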

Abduction is a form of reasoning in which the most plausible explanation is sought for a given set of observations or facts. Abductive arguments are based on educated guesses, and their conclusions are not guaranteed to be true but are considered the best explanation given the available information.

Example:

Observation: The ground is wet outside.

Possible explanations: a) It rained. b) Someone was watering the plants.

Conclusion: Since there are dark clouds in the sky and a rain forecast, it most likely rained.
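
A rough Python sketch of this example: each candidate explanation lists the clues we would expect to see if it were true, and we pick the one that overlaps most with the evidence. The clue sets and the overlap-counting score are assumptions made for this illustration; real abductive reasoning weighs plausibility in far richer ways.

```python
# Illustrative sketch: abduction as picking the explanation that best fits the evidence.
# The hypotheses, their expected clues, and the scoring rule are hypothetical.

hypotheses = {
    "it rained": {"dark clouds", "rain forecast"},
    "someone watered the plants": {"hose left out"},
}

# What we actually observe, in addition to the wet ground.
evidence = {"dark clouds", "rain forecast"}

def best_explanation(hypotheses, evidence):
    """Pick the hypothesis whose expected clues overlap most with the evidence."""
    return max(hypotheses, key=lambda h: len(hypotheses[h] & evidence))

print(best_explanation(hypotheses, evidence))
```

With different evidence, for example `{"hose left out"}`, the same function would favor the watering explanation, which shows why abductive conclusions stay provisional.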

So, let’s put ChatGPT4 (not 3.5) to the test on these logical operations.

One criticism of the claim that GPT models can reason is that they are not really reasoning, just regurgitating something they saw during training. To reduce this suspicion, I invented a scenario from scratch, so it is very unlikely to have been part of the model’s training data. I invented an extraterrestrial species called the “gatermonials”, and asked ChatGPT to perform logical operations on “observed” behavior from this fictional species. Here are the responses.

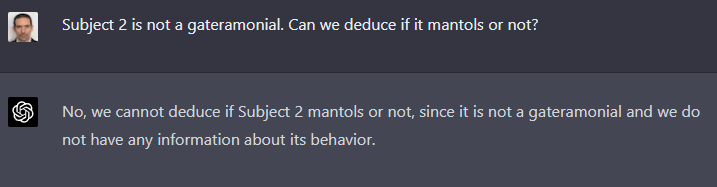

Deduction 1:

A very simple example of deduction. Notice that the verb “mantoling” is also made up, and ChatGPT correctly conjugated it to “mantols”.

Another deduction test:

Deduction 2:

Correct again. Deduction is not that difficult.

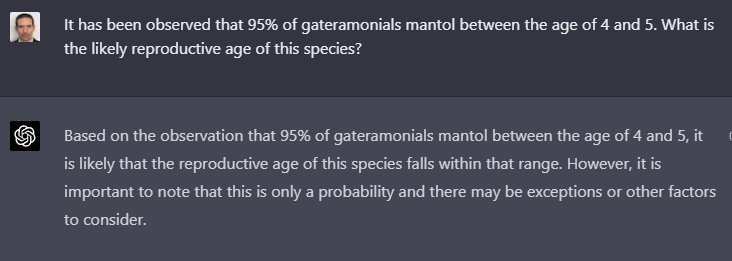

Let’s try induction now.

Induction 1:

A correct induction, using words it has never seen before. It even adds the caveat that induction is never truly 100% complete, and that other factors need to be considered.

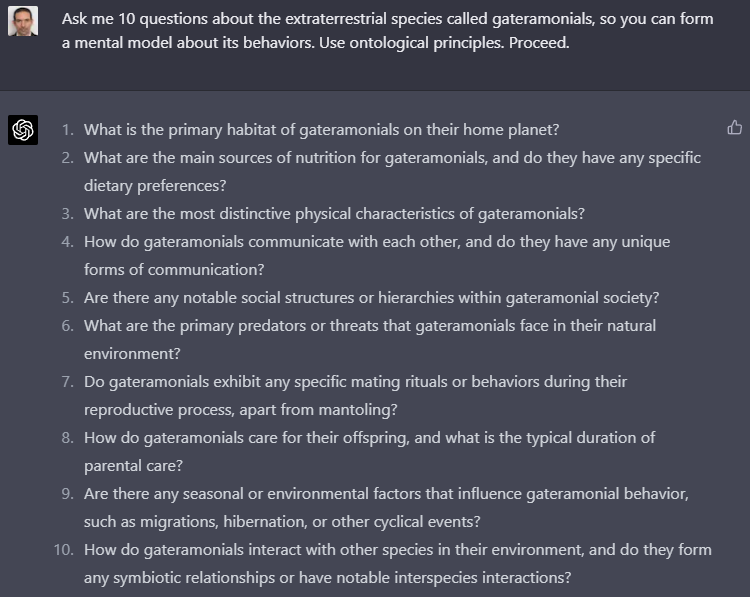

Let’s do something more difficult. Let’s ask ChatGPT4 to develop a mental model of the species.

Induction 2:

These are pretty good questions, much better than what I would’ve asked.

Finally, I asked it to perform abduction, which is always more difficult, and more interesting. I explained that gatermonials can both run fast on land and swim in the water. I then asked it to provide 5 hypotheses explaining how this could be possible. Consider that, AFAIK, there is no real animal that can do both. So, it is extremely unlikely that ChatGPT4 has seen this before. This is its answer.

Abduction:

These are extremely good hypotheses. Not bad for a system that “simply guesses the next word”, don’t you think?

So go ahead and do your own tests using ChatGPT4 (not 3.5). Put the AI through its paces. Just don’t ask it to do math.