WHY CAN’T CHATGPT COUNT?

April 1st, 2023

If you use ChatGPT enough, you may soon realize it’s really bad at counting. And not only counting, but math in general.

To understand its counting capabilities, I ran an experiment. I created a Python script that generates strings of random words, starting with one word, then 2, then 3, etc., and asked GPT 3.5, via the API, to count how many words there are. Here’s a screenshot of the output. The first number after each string is the actual number of words; the second number is GPT’s count, and the third number is the difference between the two. (These tests were run using GPT 3.5, on March 13th, 2023.)

As you can see, it can count fine up to about 10 or so. After that, it starts to get it wrong. Take a look at this graph:

The blue line shows the actual number of words in the string presented to GPT. The red line is GPT’s count. As you can see, initially it gets it right. Then it starts to deviate a little. But after 100 words, it pretty much just estimates the ballpark number, eventually oscillating by an order of magnitude.
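For anyone who wants to try something similar, here is a minimal sketch of how such an experiment might be structured. It is not the original script: the word list is made up, and `ask_model` is a hypothetical callable that stands in for the API request, so you would replace it with your own function that sends the string to the model and parses the number it returns.

```python
import random

# Hypothetical vocabulary; the word source used in the original script is not shown.
WORDS = ["apple", "river", "stone", "cloud", "tiger", "lamp", "green", "music"]


def make_string(n, rng):
    """Return n random words joined by single spaces."""
    return " ".join(rng.choice(WORDS) for _ in range(n))


def run_experiment(ask_model, max_words, seed=0):
    """Return a list of (actual, reported, difference) rows for 1..max_words words.

    ask_model is an assumed callable: it takes the string and returns the
    word count reported by whatever is being tested.
    """
    rng = random.Random(seed)
    rows = []
    for n in range(1, max_words + 1):
        text = make_string(n, rng)
        reported = ask_model(text)
        rows.append((n, reported, reported - n))
    return rows


def exact_counter(text):
    """Stand-in for the model call: counts words exactly."""
    return len(text.split())


if __name__ == "__main__":
    for actual, reported, diff in run_experiment(exact_counter, 5):
        print(actual, reported, diff)
```

With the exact counter plugged in, the difference column is always zero. Swapping in a real model call is what would produce the kind of drift described above.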

What I find fascinating is that we humans are basically the same. Let me explain. Counting is a learned skill. Counting and math are not part of our language instinct. You don’t need to teach children to talk; they have the brain hardware and software pre-made to learn language; they just need to be exposed to it, and they will pick it up. Not so with counting and math. You need to teach children these skills.

When we ask GPT “count these words”, it’s like asking a child who has not been taught how to do so. GPT can get the first 10 or so right, because it has been exposed to training content of the sort of “these are the 10 reasons why you should leave your boyfriend”. It has not been sufficiently exposed to labeled lists with hundreds or thousands of elements. So, there are no weights and biases in the neural network that can properly respond to a counting question. I find it amazing it can ballpark the number, and have no idea how it does it.

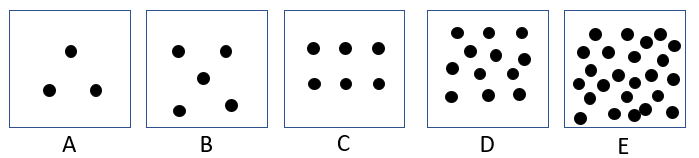

Going back to the human analogy, look at this image:

If I ask you to guess the number of dots, without actually counting them one by one, you will no doubt get A, B, and C right. You just might get D. But it’s very unlikely you’ll get E. Your brain is just not trained to do so. Something similar is happening in GPT’s neural network.

But the interesting thing is, you CAN teach GPT to count by explaining how to do it; in other words, by teaching it the algorithm. This is the instruction I gave ChatGPT 3.5:

In mathematical terms, I asked it to perform a “bijection”.

And this is what it responded:

Which is correct. We taught ChatGPT how to count.

But only up to a point. Occasionally it still gets it wrong, like a little kid.
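If the word “bijection” is unfamiliar, the idea is simply to pair each item with the numbers 1, 2, 3, and so on, one to one, and read off the last number used. Here is a rough sketch of that idea in Python. The function name is made up for illustration, and this is not the prompt given to ChatGPT, only the counting algorithm the prompt was describing.

```python
def count_by_pairing(text):
    """Pair each word with 1, 2, 3, ... and return (count, pairs).

    The count is the last number used in the pairing, or 0 if there are no words.
    """
    pairs = list(enumerate(text.split(), start=1))
    count = pairs[-1][0] if pairs else 0
    return count, pairs


if __name__ == "__main__":
    count, pairs = count_by_pairing("red green blue")
    for number, word in pairs:
        print(number, word)
    print("total:", count)
```

Writing out the numbered pairs is what makes the count checkable: every word gets exactly one number, and no number is skipped.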

And for the same reasons, ChatGPT generally sucks at math; it just doesn't have the math algorithms.

The best option is to outsource that part to some other software. For example, you can tell GPT (using the API) that if the user asks a math question, it should create a Python script, execute it, and give the result. This is the approach being implemented in ChatGPT Plus with plugins.
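To make the outsourcing idea concrete, here is a small sketch of the “other software” side. It assumes the model has been prompted to reply with a plain arithmetic expression rather than a full script, which is a simplification of the approach described above, and it evaluates that expression with Python’s `ast` module instead of running arbitrary code. The `evaluate` function and the set of supported operators are my own choices for illustration.

```python
import ast
import operator

# Operators this sketch is willing to evaluate; anything else is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}


def evaluate(expression):
    """Evaluate a plain arithmetic expression such as '12 * (3 + 4)'."""

    def walk(node):
        # Numeric literals are returned as-is.
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        # Binary operations are evaluated recursively on both sides.
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        # Unary minus, e.g. '-5'.
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        # Function calls, names, and everything else are refused.
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval").body)


if __name__ == "__main__":
    print(evaluate("12 * (3 + 4)"))
```

The point of the design is the division of labor: the language model turns the user’s question into an expression, and ordinary code, which does have the math algorithms, computes the answer.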

But this is my point. For the most part, you cannot just tell ChatGPT “do this”. Sometimes that will work, often it will not, and you will blame ChatGPT, when in reality, it’s your fault for not applying proper prompt engineering. ChatGPT is a tool, and you need to know how to use it. If you get into a car without knowing how to drive, and you crash, it’s your fault, not the car’s. It’s amazing how many articles and tweets are out there from people who use ChatGPT for the first time for 5 minutes, don’t get the results they expected, and dismiss it as a useless “stochastic parrot”. It’s a tool. Learn to use it.